New York University physicists have created new techniques that use machine learning to significantly improve data analysis for the Large Hadron Collider (LHC), the world’s most powerful particle accelerator.

“The methods we developed greatly enhance our discovery potential for new physics at the LHC,” says Kyle Cranmer, a professor of physics and the senior author of the paper, which appears in the journal Physical Review Letters.

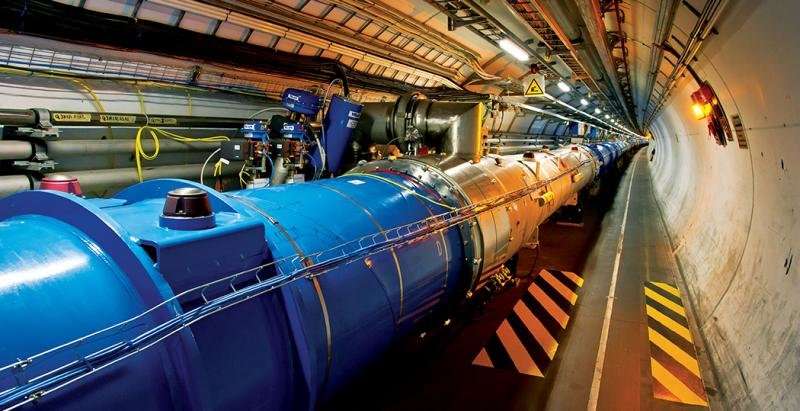

Located at the CERN laboratory near Geneva, Switzerland, the LHC is probing a new frontier in high-energy physics and may reveal the origin of the mass of fundamental particles, the source of the elusive dark matter that fills the universe, and even extra dimensions of space.

In 2012, data collected by the LHC backed the existence of the Higgs boson, a subatomic particle that plays a key role in our understanding of the universe. The following year, Peter Higgs and François Englert received the Nobel Prize in Physics in recognition of their work in developing the theory of what is now known as the Higgs field, which gives elementary particles mass.

NYU researchers, including Cranmer, had searched for evidence of the Higgs boson using data collected by the LHC, developed statistical tools and methodology used to claim the discovery, and performed measurements of the new particle, establishing that it was indeed the Higgs boson.

The new methods outlined in the Physical Review Letters paper offer the possibility for additional, pioneering discoveries.

“In many areas of science, simulations provide the best descriptions of a complicated phenomenon, but they are difficult to use in the context of data analysis,” explains Cranmer, also a faculty member at NYU’s Center for Data Science. “The techniques we’ve developed build a bridge allowing us to exploit these very accurate simulations in the context of data analysis.”

In physics, this challenge is often a daunting one.

For instance, Cranmer notes, it is straightforward to make a simulation of the break in a game of billiards, with balls bouncing off each other and the rails. However, it is much more difficult to look at the final position of the balls and infer how hard and at what angle the cue ball was initially struck.

“While we often think of pencils and papers or blackboard full of equations, modern physics often requires detailed computer simulations,” he adds. “These simulations can be very accurate, but they don’t immediately provide a way to analyze the data.

“Machine learning excels at gleaning patterns in data, and this capability can be used to summarize simulated data, providing the modern-day equivalent to a blackboard full of equations.”

The paper’s other authors are Johann Brehmer, a postdoctoral fellow at NYU’s Center for Data Science; Gilles Louppe, a Moore-Sloan Data Science fellow at NYU at the time of the research and now a professor at Belgium’s University of Liège; and Juan Pavez, a doctoral student at Chile’s Santa Maria University.

“The revolution in artificial intelligence is leading to breakthroughs in science,” observes Cranmer. “Multidisciplinary teams—like this one that brought together physics, data science, and computer science—are making that happen.”