In a self-congratulatory move, Microsoft announced some major improvements today to its fundamentally biased facial recognition software. The Azure-based Face API was criticized in a research paper earlier this year for its error rates (as high as 20.8 percent) when identifying the gender of people of color, particularly women with darker skin tones. By contrast, the study found that Microsoft's AI identified the gender of "lighter male faces" with an error rate of zero percent.

Like other companies developing face recognition tech, Microsoft didn't have enough images of black and brown people, and it showed in its recognition test results. Microsoft's blog post today puts the onus primarily on the data it used to build the facial recognition software, stating that such technologies are "only as good as the data used to train them." Given that diagnosis, the most obvious fix was a new dataset containing more images of black and brown people, and that is what Microsoft used.

According to the blog post, "The Face API team made three major changes. They expanded and revised training and benchmark datasets, launched new data collection efforts to further improve the training data by focusing specifically on skin tone, gender and age, and improved the classifier to produce higher precision results." With the latest batch of improvements, Microsoft said it was able to reduce error rates for men and women with darker skin by up to 20 times. For women overall, the company said error rates fell by a factor of nine.

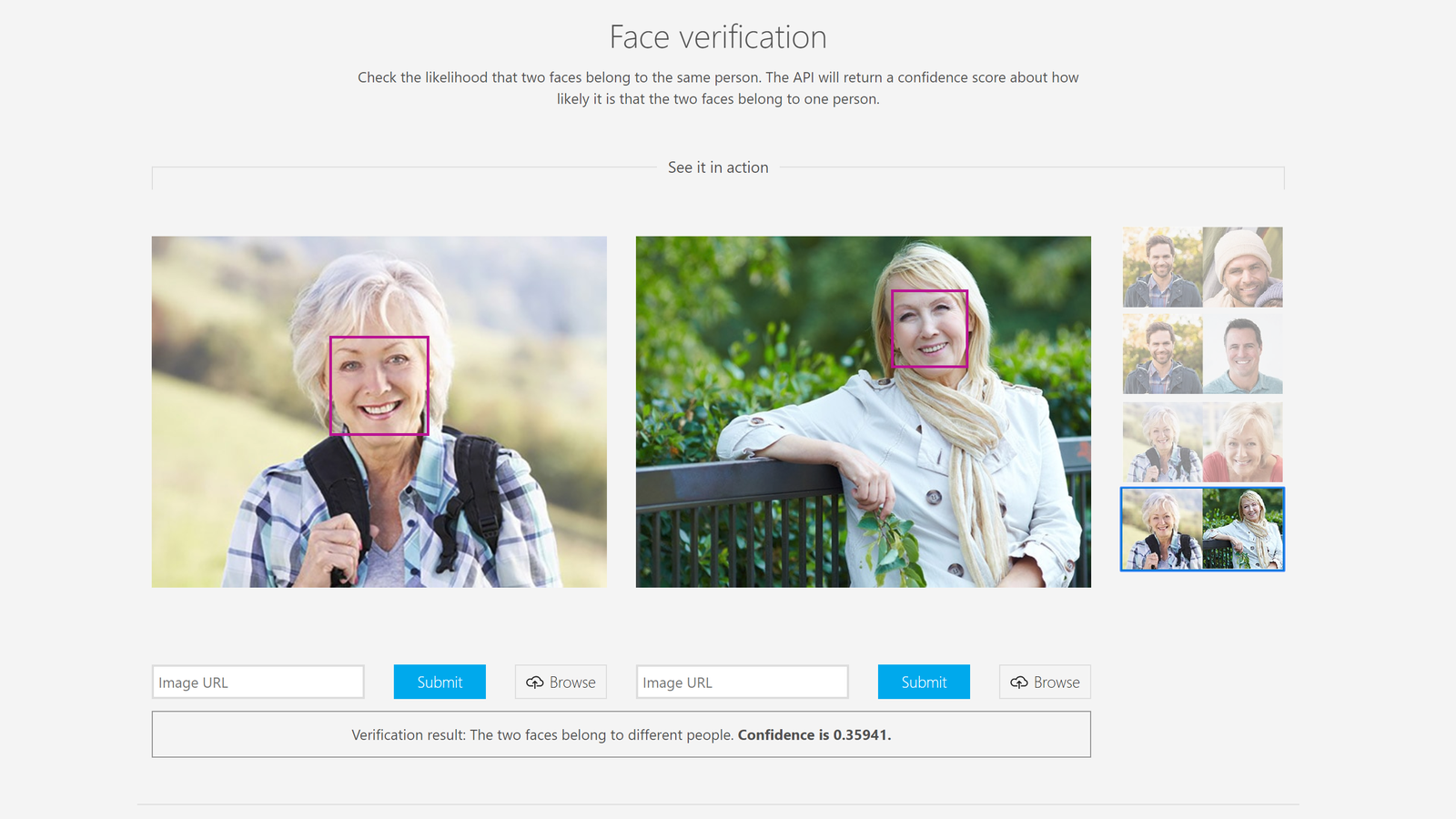

A quick glance at Microsoft’s Face API page gives you a clue as to why Microsoft’s recognition software might not be so great at identifying darker faces:

In the blog post, Microsoft senior researcher Hanna Wallach touched on one of the industry's broader failings, noting that data generated by a biased society will produce biased results when it is used to train machine learning systems. "We had conversations about different ways to detect bias and operationalize fairness," Wallach said. "We talked about data collection efforts to diversify the training data. We talked about different strategies to internally test our systems before we deploy them."

The failure here was never solely that the tech didn't work properly for anyone who wasn't white and male. Nor do the problems end once Microsoft gets really good at identifying and gendering black and brown people.

In January, Microsoft stated that ICE would be using its Azure Government Cloud service, in part to "process data on edge devices or utilize deep learning capabilities to accelerate facial recognition and identification." The announcement has since prompted employees to call on the company to cancel its contract with the agency.

Even if face recognition tech becomes less biased, it can still be weaponized against people of color. On Monday, Brian Brackeen, CEO of face recognition startup Kairos, published an op-ed explaining how dangerous the technology can be in the hands of government and police.