Imagine that you’re living in some dystopian future, and you have been accused of being an advanced AI, which is outlawed in this society. The penalty is death, and in order to convince the judge who will decide your fate, you can utter just one word, any word you like from the dictionary, to prove that you’re flesh and blood. What word do you choose?

It sounds like the setup for a cheesy sci-fi short, but this is actually part of a curious paper from a pair of researchers at MIT on something they call the “Minimal Turing Test.”

Instead of a machine trying to convince someone they’re human through conversation — which was the premise of the original Turing Test, outlined by British scientist Alan Turing in his seminal 1950 paper “Computing Machinery and Intelligence” — the Minimal Turing Test asks for just one word, either chosen completely freely or picked from a pair of words.

The researchers responsible, John McCoy and Tomer Ullman, clarify that the Minimal Turing Test isn’t a benchmark for AI progress, but a way of probing how humans see themselves in relation to machines. This question is going to become increasingly relevant in a world filled with AI assistants, deepfaked humans, and Google auto reply handling your email. In a world of human-like AI, what do we think sets us apart? What makes us different?

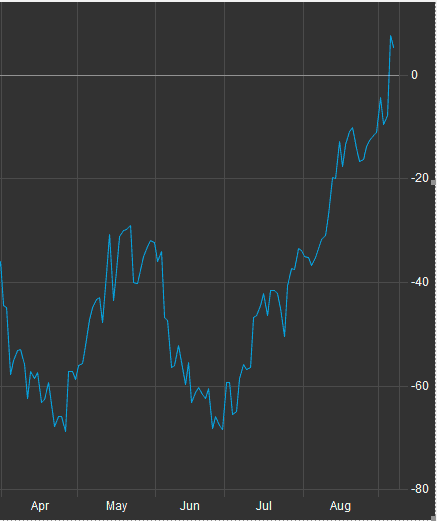

In the first of McCoy and Ullman’s two tests, 936 participants were asked to select any word they liked that they thought could be proof of their humanity. Despite the free range of choices, results clustered around a small number of themes. The four most frequently picked words were “love” (134 answers), “compassion” (33 answers), “human” (30 answers), and “please” (25 answers), which made up a quarter of all responses. Other clusters were empathy (words like “emotion,” “feelings,” and “sympathy”), and faith and forgiveness (words like “mercy,” “hope,” and “god”).

All in all, the 936 answers covered just 428 distinct words, which is a striking amount of cohesion.


In the second test, 2,405 participants had to choose between pairs of words, deciding which of the two they thought was given by a human and which by a machine. Again, words like “love,” “human,” and “please” scored strongly, but the winning word was simpler and distinctly biological: “poop.” Yes, out of all of the word pairings, “poop” was selected most frequently to denote the very essence and soul of humanity. Poop.

Speaking to The Verge, McCoy, of MIT’s Sloan Neuroeconomics Laboratory, stressed that the test was more about social psychology than computer science.

“We don’t see it being used as the next CAPTCHA,” McCoy says. “The practical applications it has in the AI computer space is more when you’re thinking about user interface design and things like that. In those contexts, it’s perhaps useful to know how people think about computers and what they think sets them apart.”

This makes sense, as even the original Turing Test has long fallen out of favor with computer scientists as a test of machine intelligence. Critics say that it measures the ability of programmers to find conversational hacks that can trick humans more than it measures intelligence.

For example, in 2014, news coverage pronounced that the Turing Test had been passed by a chatbot. The programmers tricked judges by having their bot identify itself as a 13-year-old Ukrainian boy named Eugene Goostman. This provided the perfect cover for the bot’s many mistakes and its inability to answer certain questions. As critics like computer scientist Gary Marcus noted, “What Goostman’s victory really reveals … is not the advent of SkyNet or cyborg culture but rather the ease with which we can fool others.”

But this isn’t to say that the Turing Test is useless. Creating computer programs that can chat convincingly is a fruitful challenge for AI researchers that may benefit humanity. The test is also still a fantastic thought experiment that can help us explore complex questions surrounding our understanding of intelligence. We can also modify it to sharpen its focus by asking computers not to simply chat, but to answer queries that require a nuanced and rich understanding of the world. (One example is asking a computer, “What are the plurals of ‘platch’ and ‘snorp’?” A human would probably answer “platches” and “snorps,” despite the fact that these words are nonsense and can’t be found in a dictionary.)

It’s in this framework that the Minimal Turing Test is best appreciated as a thought experiment, not a benchmark for AI progress. McCoy says what surprised him most about the research was just how much creativity there was in the answers. “People came up with all sorts of interesting shibboleths and puns,” he says, with words like “bootylicious” and “supercalifragilisticexpialidocious.” (Try spelling that without Google.)

“It tells you something about the gap between humans and smart robots,” says McCoy, “that people who have never had to think about this situation before came up with a lot of smart and funny results.” It’s something, in other words, that a computer would struggle with.