Using Photoshop and other image manipulation software to tweak faces in photos has become common practice, but it’s not always made clear when it’s been done. Berkeley and Adobe researchers have created a tool that can not only tell when a face has been Photoshopped, but also suggest how to undo it.

Right off the bat it must be noted that this project applies only to Photoshop manipulations, and in particular those made with the “Face Aware Liquify” feature, which allows for both subtle and major adjustments to many facial features. A universal detection tool is a long way off, but this is a start.

The researchers (among them Alexei Efros, who just appeared at our AI+Robotics event) began from the assumption that a great deal of image manipulation is performed with popular tools like Adobe’s, and as such a good place to start would be looking specifically at the manipulations possible in those tools.

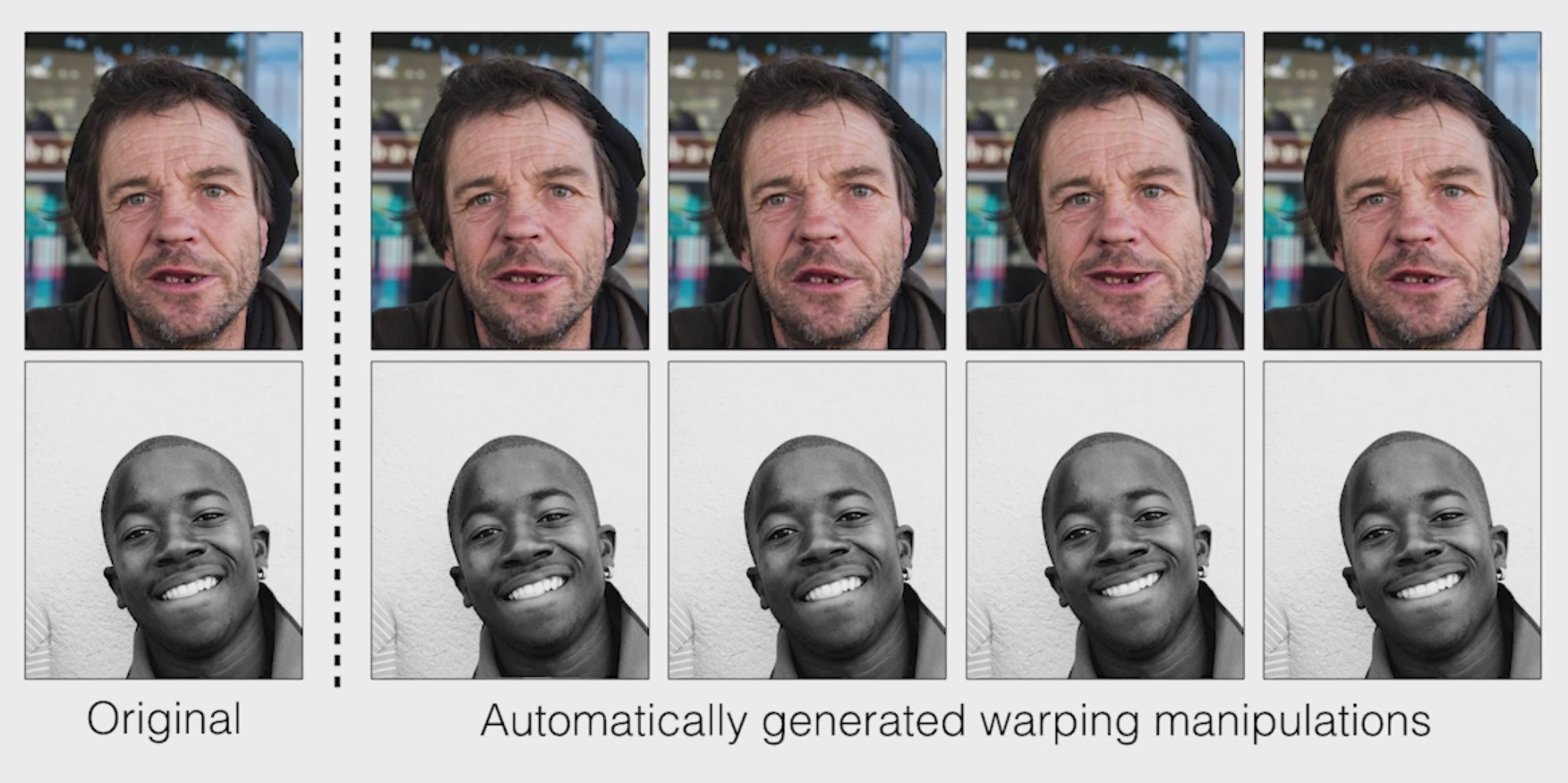

They set up a script to take portrait photos and manipulate them slightly in various ways: move the eyes a bit and emphasize the smile, narrow the cheeks and nose, things like that. They then fed the originals and warped versions to the machine learning model en masse, in the hope that it would learn to tell them apart.
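To make the idea concrete, here is a minimal sketch of how such a labeled set could be built. It is not the team’s script and it does not call Photoshop: `local_warp` is a hypothetical stand-in for a Face Aware Liquify tweak, the "portraits" are tiny synthetic grayscale grids, and every name and parameter here is an assumption made for illustration.

```python
import math
import random


def bilinear_sample(img, x, y):
    """Sample a 2D grayscale image (list of rows) at fractional (x, y), clamping at the edges."""
    h, w = len(img), len(img[0])
    x = min(max(x, 0.0), w - 1.0)
    y = min(max(y, 0.0), h - 1.0)
    x0, y0 = int(x), int(y)
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
    fx, fy = x - x0, y - y0
    top = img[y0][x0] * (1 - fx) + img[y0][x1] * fx
    bottom = img[y1][x0] * (1 - fx) + img[y1][x1] * fx
    return top * (1 - fy) + bottom * fy


def local_warp(img, cx, cy, dx, dy, radius):
    """Hypothetical liquify-style tweak: push pixels near (cx, cy) by about (dx, dy),
    fading the push out with a Gaussian falloff."""
    h, w = len(img), len(img[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            weight = math.exp(-((x - cx) ** 2 + (y - cy) ** 2) / (2 * radius ** 2))
            row.append(bilinear_sample(img, x - dx * weight, y - dy * weight))
        out.append(row)
    return out


def make_training_pairs(images, rng):
    """Return (image, label) examples: label 0 for untouched, 1 for warped."""
    examples = []
    for img in images:
        h, w = len(img), len(img[0])
        warped = local_warp(
            img,
            cx=rng.uniform(0, w - 1),
            cy=rng.uniform(0, h - 1),
            dx=rng.uniform(-2, 2),
            dy=rng.uniform(-2, 2),
            radius=min(h, w) / 4,
        )
        examples.append((img, 0))
        examples.append((warped, 1))
    return examples


# Toy stand-ins for portrait photos: three 16x16 synthetic patterns.
rng = random.Random(0)
portraits = [[[float((x * y) % 7) for x in range(16)] for y in range(16)] for _ in range(3)]
dataset = make_training_pairs(portraits, rng)
```

Each original contributes one untouched example and one warped example, so a classifier trained on `dataset` sees both classes in equal numbers.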

Learn it did, and quite well. When humans were presented with images and asked which had been manipulated, they performed only slightly better than chance. But the trained neural network identified the manipulated images 99 percent of the time.

What is it seeing? Probably tiny patterns in the optical flow of the image that humans can’t really perceive. Those same patterns also hint at exactly which manipulations were made, letting it suggest an “undo” of them despite never having seen the original.
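The “undo” step can be pictured as resampling the image along the reverse of an estimated flow field. The sketch below assumes the flow is simply handed to us (here a hand-written constant shift); in the research a model would have to estimate it. The function names are hypothetical, and negating the flow is only an approximation that works for small, smooth warps.

```python
def bilinear_sample(img, x, y):
    """Sample a 2D grayscale image (list of rows) at fractional (x, y), clamping at the edges."""
    h, w = len(img), len(img[0])
    x = min(max(x, 0.0), w - 1.0)
    y = min(max(y, 0.0), h - 1.0)
    x0, y0 = int(x), int(y)
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
    fx, fy = x - x0, y - y0
    top = img[y0][x0] * (1 - fx) + img[y0][x1] * fx
    bottom = img[y1][x0] * (1 - fx) + img[y1][x1] * fx
    return top * (1 - fy) + bottom * fy


def warp_with_flow(img, flow):
    """Build an image whose pixel (x, y) is img sampled at (x + u, y + v),
    where flow[y][x] = (u, v)."""
    return [
        [bilinear_sample(img, x + u, y + v) for x, (u, v) in enumerate(row)]
        for y, row in enumerate(flow)
    ]


def undo_warp(warped, flow):
    """Approximate the original by sampling the warped image along the negated flow.
    Assumption: the flow is small and smooth enough that negating it is a fair inverse."""
    negated = [[(-u, -v) for (u, v) in row] for row in flow]
    return warp_with_flow(warped, negated)


# Toy example: a 4x6 gradient image pulled one pixel to the left.
original = [[float(10 * y + x) for x in range(6)] for y in range(4)]
flow = [[(1.0, 0.0)] * 6 for _ in range(4)]  # hand-written stand-in for a predicted flow
warped = warp_with_flow(original, flow)
restored = undo_warp(warped, flow)
```

For this constant shift the restored image matches the original everywhere except the leftmost column, where the information was pushed out of frame and cannot be recovered.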

Since it’s limited to just faces tweaked by this Photoshop tool, don’t expect this research to form any significant barrier against the forces of evil lawlessly tweaking faces left and right out there. But this is just one of many small starts in the growing field of digital forensics.

“We live in a world where it’s becoming harder to trust the digital information we consume,” said Adobe’s Richard Zhang, who worked on the project, “and I look forward to further exploring this area of research.”

You can read the paper describing the project and inspect the team’s code at the project page.